In late 2020, a working group was established to use causal models to study incentive concepts and their application to AI safety. It was founded by Ryan Carey (FHI) and Tom Everitt (DeepMind) and includes other researchers from Oxford and the University of Toronto.

Since its formation, the working group has published four papers in major AI conferences and journals:

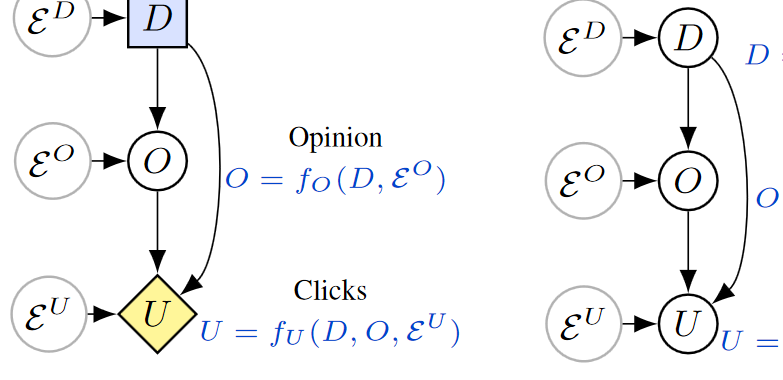

Agent Incentives: A Causal Perspective: presents sound and complete graphical criteria for four incentive concepts: value of information, value of control, response incentives, and control incentives.

T. Everitt*, R. Carey*, E. Langlois*, P. A. Ortega, S. Legg. (* denotes equal contribution)

AAAI-21

How RL Agents Behave When Their Actions Are Modified: studies how user interventions affect the learning of different RL algorithms.

E. Langlois, T. Everitt.

AAAI-21

Equilibrium Refinements for Multi-Agent Influence Diagrams: Theory and Practice: introduces a notion of subgames in multi-agent (causal) influence diagrams, alongside classic equilibrium refinements. The paper also reports on pycid, a Python library for working with causal influence diagrams.

L. Hammond, J. Fox, T. Everitt, A. Abate, M. Wooldridge.

AAMAS-21

Reward tampering problems and solutions in reinforcement learning: A causal influence diagram perspective: analyzes various reward tampering (aka “wireheading”) problems with causal influence diagrams.

T. Everitt, M. Hutter, R. Kumar, V. Krakovna.

Accepted to Synthese, 2021

More information about the working group can be found here.