How can AI systems learn safely in the real world? Self-driving cars have safety drivers, people who sit in the driver’s seat and constantly monitor the road, ready to take control if an accident looks imminent. Could reinforcement learning systems also learn safely by having a human overseer?

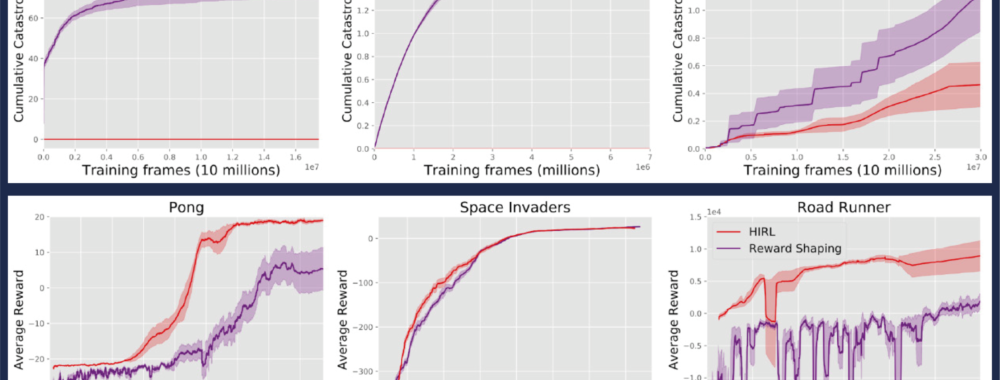

HIRL: Human Intervention RL

Deep Reinforcement Learning (“Deep RL”) has made startling progress in Go, in Atari videogames, and in navigation and control tasks in realistic 3D environments. These milestones have come in simulated environments. Will Deep RL translate this success into real-world tasks?

There are two major obstacles. The first, discussed in this paper, is that Deep RL requires a huge number of observations, which are slow and expensive to obtain in real-world tasks. The second obstacle to real-world application of RL is safety. Model-free RL agents learn only through trial and error: to learn to avoid a catastrophe, they first need to cause one. In Atari games it’s fine for an RL agent to die countless times during training. Yet in real-world tasks there are catastrophes that are never acceptable.
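To make the trial-and-error point concrete, here is a minimal sketch using tabular Q-learning on a toy five-state corridor. This is an illustration only, not the setup used in the paper: the environment, the reward values, and all names (`step`, `train`, `CATASTROPHE`) are hypothetical. Stepping into state 0 is the "catastrophe", and the only way the agent's estimate for that move can change is by actually making it.

```python
import random

# Hypothetical toy environment: states 0..4 on a line.
N_STATES = 5
CATASTROPHE, GOAL, START = 0, 4, 2
LEFT, RIGHT = 0, 1


def step(state, action):
    """Deterministic toy dynamics: returns (next_state, reward, done)."""
    next_state = state - 1 if action == LEFT else state + 1
    if next_state == CATASTROPHE:
        return next_state, -100.0, True   # large penalty, episode ends
    if next_state == GOAL:
        return next_state, 1.0, True      # small reward, episode ends
    return next_state, 0.0, False


def train(episodes, epsilon, seed=0, alpha=0.5, gamma=0.9):
    """Epsilon-greedy tabular Q-learning; returns (q_table, catastrophe_count)."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action], all zero at start
    catastrophes = 0
    for _ in range(episodes):
        state, done = START, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice((LEFT, RIGHT))  # explore
            else:
                # Greedy choice; ties are broken in favour of RIGHT.
                action = LEFT if q[state][LEFT] > q[state][RIGHT] else RIGHT
            next_state, reward, done = step(state, action)
            # Standard Q-learning target: no bootstrapping from terminal states.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            if next_state == CATASTROPHE:
                catastrophes += 1
            state = next_state
    return q, catastrophes


# Without exploration the agent never steps into state 0, so it never
# learns anything about how bad that move is.
q_safe, n_safe = train(episodes=200, epsilon=0.0)

# With exploration, the estimate for the catastrophic move becomes negative
# only after the catastrophe has actually happened at least once.
q_explore, n_explore = train(episodes=200, epsilon=0.3)
```

With `epsilon=0.0` the agent in this sketch walks straight to the goal, causes no catastrophes, and `q_safe[1][LEFT]` stays at its initial value of `0.0`: it has no information that the move is dangerous. With exploration switched on, `q_explore[1][LEFT]` can only turn negative once `n_explore` is at least 1, which is exactly the problem for real-world training.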